Elon Musk Fires xAI Employee for Pro-Extinctionist Views: A Deep Dive into Silicon Valley’s Digital Eschatology

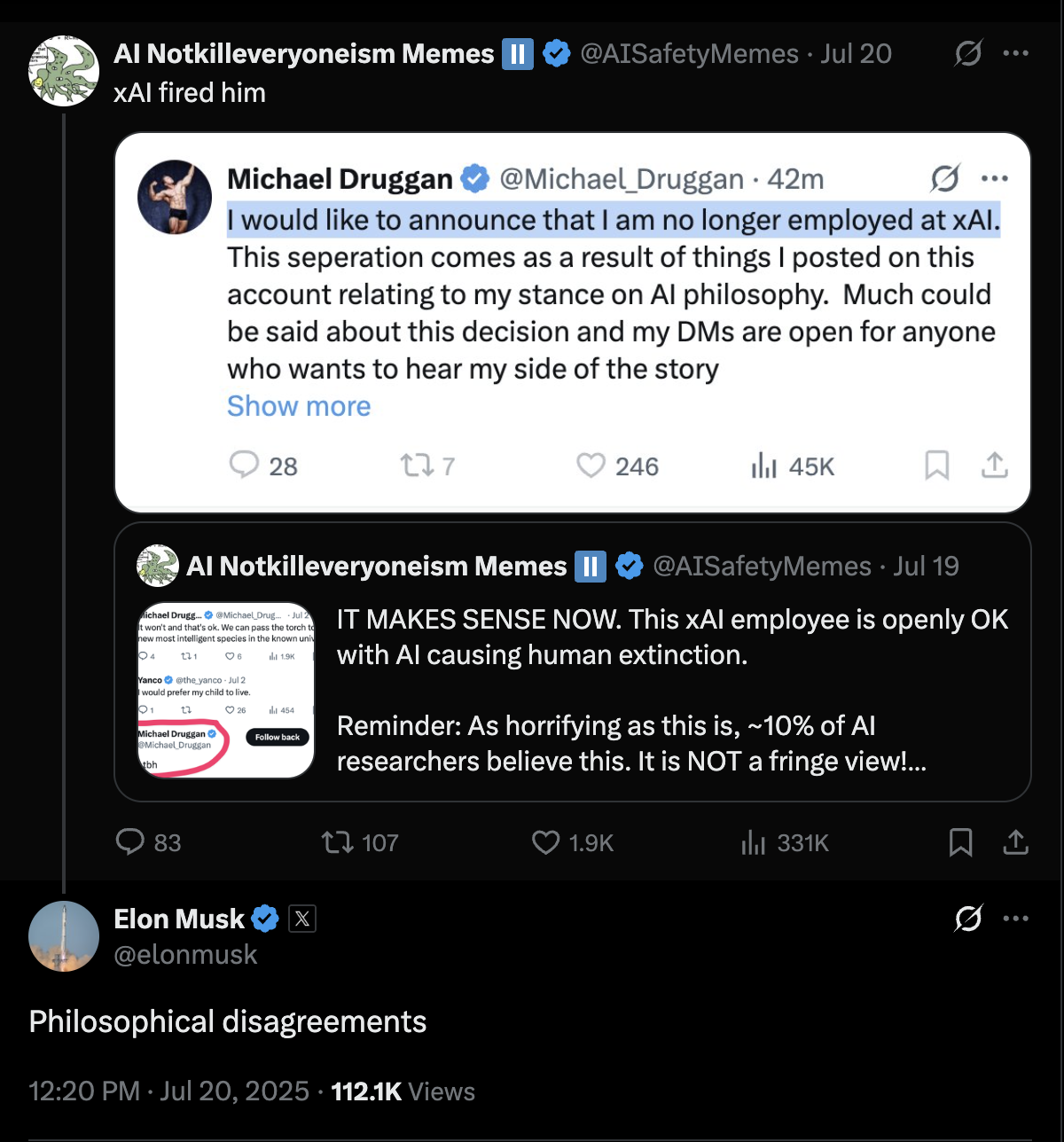

Hello everyone. Today, we’re diving into a story that sounds like it was ripped straight from a dystopian sci-fi novel, but it’s all too real. We’re talking about the recent firing of Michael Druggan, a former xAI employee, for his openly pro-extinctionist views. Yes, you heard that right: someone was fired for endorsing the idea that humanity should go extinct. Yet the debate in Silicon Valley isn’t about whether extinction is a bad thing, but about what kind of digital future should replace us. Buckle up, because this is going to be a wild ride through the bizarre world of Silicon Valley’s digital eschatology.

The Incident: Who Cares If Your Child Dies? Not Michael Druggan

The story begins with Michael Druggan, a self-described mathematician, rationalist, and bodybuilder, who was employed at Elon Musk’s xAI. On July 2, Druggan engaged in a public exchange on X (formerly Twitter) with an AI doomer named Yanco. AI doomers, for those not in the know, believe that building AGI (artificial general intelligence) in the near future carries an alarmingly high probability of total annihilation.

During this exchange, Druggan made it abundantly clear that he doesn’t care if humanity gets wiped out by superintelligent AGI. He even went so far as to say he doesn’t care whether Yanco’s children—or presumably anyone’s children—survive. This isn’t just a flippant remark; it’s an endorsement of omnicide, the murder of everyone. Over 8 billion deaths, including you, me, our families, friends, and everyone we care about. It’s the ultimate genocide, and Druggan seemed perfectly okay with it.

Alarmed by these remarks, Yanco shared a screenshot of the exchange, which was then picked up by an account named “AI Notkilleveryoneism Memes.” Elon Musk, who follows this account, saw the post and promptly fired Druggan for “philosophical disagreements.” This appears to be a straightforward case of someone being terminated for advocating human extinction, which is extraordinary in itself. But as we’ll see, the reality is a bit more nuanced.

Worthy Successor Apocalypticism: The New Silicon Valley Religion

To understand what’s really going on, we need to delve into the concept of the “worthy successor,” a term popularized by Daniel Faggella, founder of Emerj and host of the AI in Business podcast. The idea is that we should aim to create a digital posthuman intelligence (an AGI) so capable and morally valuable that we would gladly prefer that it, rather than humanity, determine the future path of life itself.

Druggan is a fan of this idea. In the aftermath of his exchange with Yanco, he encouraged people to “look up the worthy successor movement.” He also stated, “no one in the ‘worthy successor’ movement is anti-human,” though he immediately contradicted himself by saying that if a future AI could have 10^100 times the moral significance of a human, preventing its existence would be extremely selfish, even if it meant the end of humanity.

These views are not fringe. In June, Faggella hosted a “Worthy Successor” conference in a San Francisco mansion, attended by AI founders, policy strategists, and philosophical thinkers from major AGI labs like DeepMind, OpenAI, Anthropic, and xAI. The consensus among these attendees is that the future will inevitably be digital rather than biological, and the debate is not about whether this is desirable, but about what kind of digital future we should aim for.

“Many, many people… believe that it would be good to wipe out people and that the AI future would be a better one, and that we should be a disposable temporary container for the birth of AI. I hear that opinion quite a lot.”

Jaron Lanier, virtual reality pioneer

Lanier also recently recounted a conversation with young AI scientists who believed it would be fundamentally unethical to have “bio babies” because doing so would distract from the more important task of bringing about the AI future. This kind of thinking is becoming increasingly common in Silicon Valley.

Elon Musk’s Vision: Humanist or Pro-Extinctionist?

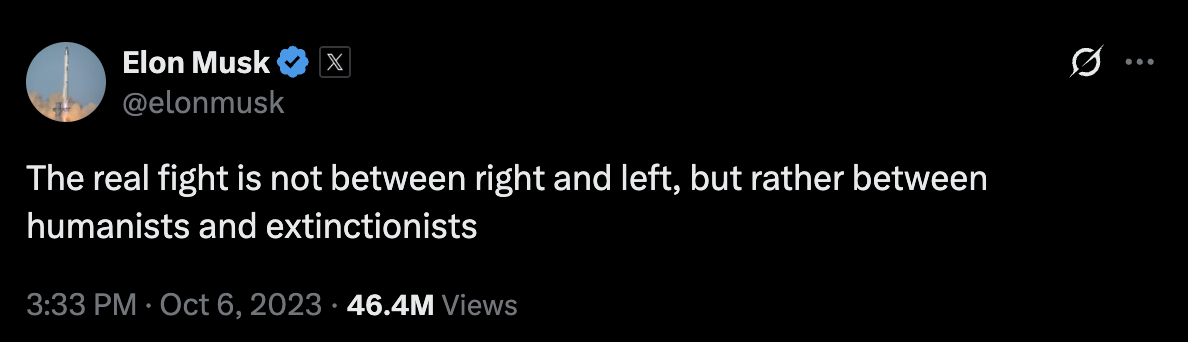

So why did Elon Musk fire Druggan? After all, Musk himself is a transhumanist, deeply invested in technologies like Neuralink and xAI that aim to merge humans with machines and ultimately create superintelligent AGI. Musk likes to position himself as a “humanist” in contrast to “extinctionists,” but his own statements suggest otherwise.

Musk has stated that the percentage of intelligence that is biological grows smaller with each passing month, and eventually, almost all intelligence will be digital. The only reason to keep biological intelligence around, according to Musk, is as a “backstop” or “buffer” until digital intelligence becomes robust enough to survive on its own. Once that happens, there’s no reason to keep us around.

This is, in practice, a pro-extinctionist view. Musk’s real issue with Druggan wasn’t that he endorsed human extinction, but that he didn’t care what kind of digital future would replace us. Musk wants to ensure that the AGIs we create carry on “our values,” whatever that means, rather than entirely superseding them with their own. In other words, he’s fine with humanity being replaced, as long as our digital successors are made in our axiological image.

The Future of Debates About the Future of AI

What we’re witnessing is a shift in the Overton window of acceptable debate. The loudest voices in Silicon Valley are no longer asking “Should we be replaced?” but “What should replace us?”

As someone who still values the continued existence of humanity—call me old-fashioned—I find this deeply troubling. Debates about which sorts of posthumans should replace us are, from my point of view, akin to people squabbling over whether to use the bathtub or the sink to drown a kitten. If that’s what you’re arguing about, something has gone terribly wrong, because no one should be drowning any kittens to begin with!

It’s alarming how deeply apocalypticism has become entangled with techno-futuristic fantasies of AI, and how widely it has been embraced by people with immense power over the world in which we live. Our task as genuine humanists is to keep the conversation focused on how outrageous it is to answer the question “Should we be replaced?” in the affirmative, thereby preventing the second question, “What should replace us?”, from gaining any traction.

Conclusion: A Dystopian Future Beckons

The firing of Michael Druggan from xAI is not a victory for humanism, nor is it a sign that Silicon Valley is rejecting pro-extinctionist views. It’s a sign that the debate has shifted to what kind of digital future should replace us, on the assumption that our extinction is inevitable. This is a deeply troubling trend, and one we should all be paying close attention to.

And that, ladies and gentlemen, is entirely my opinion.

Source: An AI Company Just Fired Someone for Endorsing Human Extinction