Qwen3-4B-Thinking-2507: The 4B Model That Outsmarts Its 30B Cousin

Hello everyone. Today we’re diving into the latest iteration of Qwen’s 4B model, the Qwen3-4B-Thinking-2507. Yes, that’s a mouthful, and no, it’s not the name of a new Final Fantasy boss. It’s the latest in a line of “thinking” models that promise to bring big-brain reasoning to the 4B parameter club. So, does it deliver, or is it just another overhyped patch note in the endless MMO of AI development? Let’s find out.

What’s New, Doc?

First off, let’s talk about the highlights. Over the past three months, the Qwen team has apparently been grinding XP in the Reasoning Dungeon, scaling up the “thinking capability” of their 4B model. The result? Qwen3-4B-Thinking-2507, which boasts:

- Significantly improved performance on reasoning tasks (logical reasoning, mathematics, science, coding, and academic benchmarks that usually require a human with at least one working brain cell).

- Markedly better general capabilities (instruction following, tool usage, text generation, and alignment with human preferences—because apparently, the previous models were aligned with the preferences of a caffeinated squirrel).

- Enhanced 256K long-context understanding capabilities (because who doesn’t want their AI to remember the entire script of War and Peace?).

And, in a move that will shock absolutely no one, this version has an “increased thinking length.” Yes, you heard that right. The model now thinks longer and harder. Insert your own innuendo here; I’m a doctor, not a comedian.

Model Overview: Stats for the Nerds

Let’s break down the specs, because what’s an AI review without a little number crunching?

- Type: Causal Language Model (not to be confused with casual, though it does seem to take its sweet time thinking).

- Training Stage: Pretraining & Post-training (because one stage just isn’t enough).

- Number of Parameters: 4.0B (3.6B non-embedding, for those who care about such things).

- Number of Layers: 36 (because 35 was too mainstream).

- Number of Attention Heads (GQA): 32 for Q and 8 for KV (I’m sure this means something to someone).

- Context Length: 262,144 tokens natively (that’s a lot of context, folks).
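For the "someone" to whom the GQA line means something: the number of KV heads is what decides how much memory the attention cache eats at long context. Here is a back-of-the-envelope sketch using the layer and head counts from the list above. The head dimension (128) and the 2-byte cache entries are my assumptions, not figures from the spec list, so treat the result as a rough estimate rather than an official number.

```python
# Rough KV-cache size estimate for a GQA model.
# From the spec list: 36 layers, 32 query heads, 8 KV heads, 262,144-token context.
# ASSUMPTIONS (not in the spec list): head_dim=128 and 2 bytes per cached value (bf16/fp16).

def kv_cache_bytes(tokens: int, layers: int = 36, kv_heads: int = 8,
                   head_dim: int = 128, bytes_per_value: int = 2) -> int:
    """Bytes needed to cache keys and values for `tokens` positions."""
    # Factor of 2: one key vector and one value vector per KV head, per layer.
    return 2 * layers * kv_heads * head_dim * bytes_per_value * tokens

GIB = 1024 ** 3
FULL_CONTEXT = 262_144

gqa = kv_cache_bytes(FULL_CONTEXT)               # 8 KV heads, as listed
mha = kv_cache_bytes(FULL_CONTEXT, kv_heads=32)  # hypothetical: one KV head per query head

print(f"GQA cache at full context: {gqa / GIB:.0f} GiB")
print(f"Same model without GQA:    {mha / GIB:.0f} GiB")
```

Under those assumptions a single full-length sequence needs about 36 GiB of cache with 8 KV heads, versus 144 GiB if every query head had its own KV head. So the 32-to-8 ratio is a 4x saving, which is a big part of why a 262,144-token window is practical at all.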

And here’s a fun fact: This model supports only “thinking mode.” Apparently, the days of mindless drivel are behind us. Also, you no longer need to specify enable_thinking=True; it’s always thinking, whether you like it or not. The default chat template already inserts the opening <think> tag into the prompt, so the output often contains only a closing </think> with no opener. Don’t panic, nothing is broken. It’s just the model being efficient. Or lazy. Hard to tell.

Performance: Numbers Don’t Lie (But They Do Exaggerate)

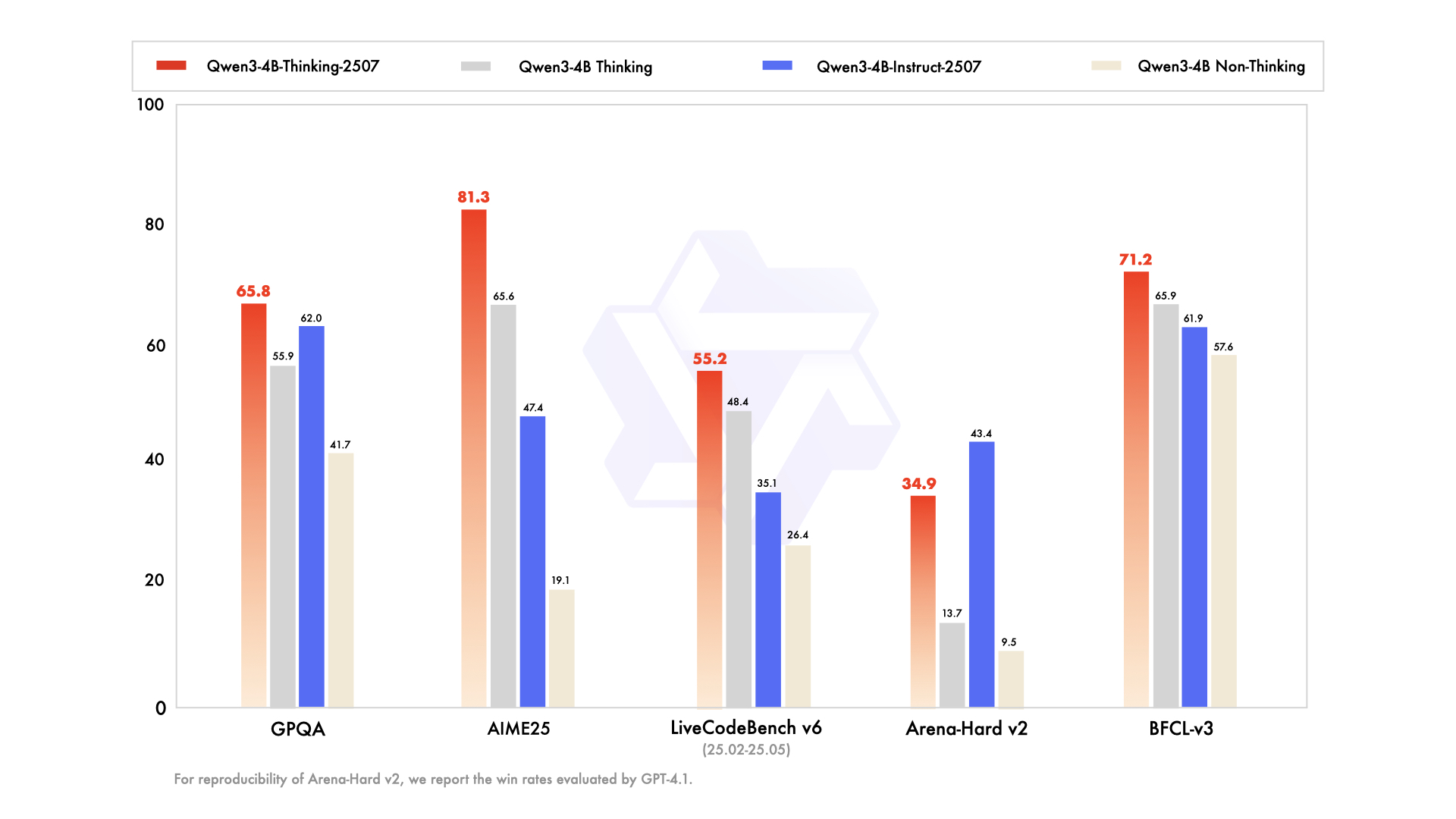

Let’s get to the meat and potatoes: the benchmarks. Here’s how Qwen3-4B-Thinking-2507 stacks up against its predecessor and the 30B model:

| Benchmark | Qwen3-30B-A3B Thinking | Qwen3-4B Thinking | Qwen3-4B-Thinking-2507 |
|---|---|---|---|
| MMLU-Pro | 78.5 | 70.4 | 74.0 |
| MMLU-Redux | 89.5 | 83.7 | 86.1 |
| GPQA | 65.8 | 55.9 | 65.8 |
| SuperGPQA | 51.8 | 42.7 | 47.8 |
| AIME25 | 70.9 | 65.6 | 81.3 |
| HMMT25 | 49.8 | 42.1 | 55.5 |
| LiveBench 20241125 | 74.3 | 63.6 | 71.8 |
| LiveCodeBench v6 (25.02-25.05) | 57.4 | 48.4 | 55.2 |
| CFEval | 1940 | 1671 | 1852 |
| OJBench | 20.7 | 16.1 | 17.9 |
| IFEval | 86.5 | 81.9 | 87.4 |
| Arena-Hard v2 | 36.3 | 13.7 | 34.9 |
| Creative Writing v3 | 79.1 | 61.1 | 75.6 |
| WritingBench | 77.0 | 73.5 | 83.3 |
| BFCL-v3 | 69.1 | 65.9 | 71.2 |
| TAU1-Retail | 61.7 | 33.9 | 66.1 |
| TAU1-Airline | 32.0 | 32.0 | 48.0 |
| TAU2-Retail | 34.2 | 38.6 | 53.5 |
| TAU2-Airline | 36.0 | 28.0 | 58.0 |
| TAU2-Telecom | 22.8 | 17.5 | 27.2 |
| MultiIF | 72.2 | 66.3 | 77.3 |
| MMLU-ProX | 73.1 | 61.0 | 64.2 |
| INCLUDE | 71.9 | 61.8 | 64.4 |
| PolyMATH | 46.1 | 40.0 | 46.2 |

Now, I know what you’re thinking: “Those are a lot of numbers, Doc.” And you’re right. But here’s the TL;DR: the 4B-Thinking-2507 model beats the original 4B Thinking model on every row, and where it still trails the 30B model it is usually nipping at its heels. On roughly half the rows it actually outperforms its bigger sibling, sometimes by a wide margin (AIME25: 81.3 vs. 70.9; TAU2-Airline: 58.0 vs. 36.0). That’s like a level 20 mage out-DPSing a level 60 warrior. Impressive, if a bit suspicious.
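If you don't trust my eyeballing of that table, here is a quick tally you can run yourself. The scores are copied straight from the rows above. The only assumption baked in is that higher is better on every benchmark, which I believe holds for all of them (CFEval is a rating, the rest are scores or percentages).

```python
# Scores copied from the table: (30B-A3B Thinking, 4B Thinking, 4B-Thinking-2507).
# ASSUMPTION: higher is better on every row.
scores = {
    "MMLU-Pro": (78.5, 70.4, 74.0),
    "MMLU-Redux": (89.5, 83.7, 86.1),
    "GPQA": (65.8, 55.9, 65.8),
    "SuperGPQA": (51.8, 42.7, 47.8),
    "AIME25": (70.9, 65.6, 81.3),
    "HMMT25": (49.8, 42.1, 55.5),
    "LiveBench 20241125": (74.3, 63.6, 71.8),
    "LiveCodeBench v6": (57.4, 48.4, 55.2),
    "CFEval": (1940, 1671, 1852),
    "OJBench": (20.7, 16.1, 17.9),
    "IFEval": (86.5, 81.9, 87.4),
    "Arena-Hard v2": (36.3, 13.7, 34.9),
    "Creative Writing v3": (79.1, 61.1, 75.6),
    "WritingBench": (77.0, 73.5, 83.3),
    "BFCL-v3": (69.1, 65.9, 71.2),
    "TAU1-Retail": (61.7, 33.9, 66.1),
    "TAU1-Airline": (32.0, 32.0, 48.0),
    "TAU2-Retail": (34.2, 38.6, 53.5),
    "TAU2-Airline": (36.0, 28.0, 58.0),
    "TAU2-Telecom": (22.8, 17.5, 27.2),
    "MultiIF": (72.2, 66.3, 77.3),
    "MMLU-ProX": (73.1, 61.0, 64.2),
    "INCLUDE": (71.9, 61.8, 64.4),
    "PolyMATH": (46.1, 40.0, 46.2),
}

# Compare the new 4B model against the 30B model, row by row.
wins = [name for name, (big, _, new) in scores.items() if new > big]
ties = [name for name, (big, _, new) in scores.items() if new == big]
losses = [name for name, (big, _, new) in scores.items() if new < big]

# And against its own predecessor.
beats_old_4b = [name for name, (_, old, new) in scores.items() if new > old]

print(f"vs 30B: {len(wins)} wins, {len(ties)} tie(s), {len(losses)} losses")
print(f"vs old 4B: ahead on {len(beats_old_4b)} of {len(scores)} rows")
```

The wins cluster in math (AIME25, HMMT25), instruction following, and the agentic TAU rows, while the 30B model keeps its lead on the knowledge-heavy and coding benchmarks. That pattern is worth keeping in mind before you retire your bigger model.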

Quickstart: Because No One Reads the Manual

The Qwen team has kindly provided a code snippet for getting started. It’s your standard Hugging Face Transformers fare, with a few quirks:

```python
from transformers import AutoModelForCausalLM, AutoTokenizer

model_name = "Qwen/Qwen3-4B-Thinking-2507"

# Load the tokenizer and the model
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(
    model_name,
    torch_dtype="auto",
    device_map="auto"
)

# Prepare the model input using the chat template
prompt = "Give me a short introduction to large language model."
messages = [
    {"role": "user", "content": prompt}
]
text = tokenizer.apply_chat_template(
    messages,
    tokenize=False,
    add_generation_prompt=True,
)
model_inputs = tokenizer([text], return_tensors="pt").to(model.device)

# Generate, then drop the prompt tokens from the front of the output
generated_ids = model.generate(
    **model_inputs,
    max_new_tokens=32768
)
output_ids = generated_ids[0][len(model_inputs.input_ids[0]):].tolist()

# Split at the last occurrence of token 151668 (the closing </think> tag)
try:
    index = len(output_ids) - output_ids[::-1].index(151668)
except ValueError:
    index = 0  # no </think> found: treat everything as final content

thinking_content = tokenizer.decode(output_ids[:index], skip_special_tokens=True).strip("\n")
content = tokenizer.decode(output_ids[index:], skip_special_tokens=True).strip("\n")

print("thinking content:", thinking_content)
print("content:", content)
```

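Yes, you have to parse out the “thinking content” from the actual content. It’s like separating the wheat from the chaff, or the useful loot from the vendor trash. The snippet does this by splitting at the last </think> token (ID 151668); if it finds none, everything is treated as the final content. Pro tip: If the output has a closing </think> but no opening tag, don’t panic. The model is just being dramatic.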
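If you only have decoded text rather than token IDs (say, a string that came back from an API server), the same split can be done on the string. This is a minimal sketch of my own, not something from the Qwen team. The helper name split_thinking is hypothetical, and it assumes the </think> tag survives decoding as literal text.

```python
def split_thinking(text: str, close_tag: str = "</think>") -> tuple[str, str]:
    """Split decoded output into (thinking, answer) at the LAST closing tag."""
    head, sep, tail = text.rpartition(close_tag)
    if not sep:
        # No closing tag at all: mirror the quickstart and treat it all as the answer.
        return "", text.strip()
    thinking = head.strip()
    # The opening tag normally lives in the prompt, but strip it if it shows up anyway.
    if thinking.startswith("<think>"):
        thinking = thinking[len("<think>"):].strip()
    return thinking, tail.strip()


raw = "Okay, the user wants 2+2. That is 4.\n</think>\n\nThe answer is 4."
thinking, answer = split_thinking(raw)
print("thinking:", thinking)
print("answer:", answer)
```

Splitting at the last tag rather than the first matches the quickstart's behavior, so a stray </think> quoted inside the reasoning ends up in the thinking part instead of leaking into the answer.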

Agentic Use: Tool Time with Qwen-Agent

If you’re into tool calling (and who isn’t these days?), Qwen3-4B-Thinking-2507 plays nicely with Qwen-Agent. You can define tools via MCP configuration files, use built-in tools, or integrate your own. The setup is straightforward, assuming you have a PhD in nested dictionaries and a minor in Pythonic incantations.

```python
from qwen_agent.agents import Assistant

# Define the LLM: an OpenAI-compatible endpoint serving the model locally
llm_cfg = {
    'model': 'Qwen3-4B-Thinking-2507',
    'model_server': 'http://localhost:8000/v1',
    'api_key': 'EMPTY',
    'generate_cfg': {
        'thought_in_content': True,
    },
}

# Define the tools: two MCP servers plus the built-in code interpreter
tools = [
    {'mcpServers': {
            'time': {
                'command': 'uvx',
                'args': ['mcp-server-time', '--local-timezone=Asia/Shanghai']
            },
            "fetch": {
                "command": "uvx",
                "args": ["mcp-server-fetch"]
            }
        }
    },
    'code_interpreter',
]

# Define the agent
bot = Assistant(llm=llm_cfg, function_list=tools)

# Run the agent; after the loop, `responses` holds the last yielded result
messages = [{'role': 'user', 'content': 'https://qwenlm.github.io/blog/ Introduce the latest developments of Qwen'}]
for responses in bot.run(messages=messages):
    pass
print(responses)
```

It’s not exactly plug-and-play, but if you’re the type who enjoys configuring your own Linux kernel, you’ll feel right at home.

Best Practices: Because You’re Probably Doing It Wrong

The Qwen team has some advice for getting the most out of this model:

- Sampling Parameters: Temperature=0.6, TopP=0.95, TopK=20, MinP=0. Presence penalty between 0 and 2 to avoid endless repetition (unless you’re into that sort of thing).

- Adequate Output Length: 32,768 tokens for most queries, 81,920 for complex problems. Because size matters.

- Standardize Output Format: Use prompts to standardize outputs, especially for math and multiple-choice questions. Apparently, the model likes structure.

- No Thinking Content in History: In multi-turn conversations, only include the final output part in the history. The model doesn’t need to remember its own internal monologue. Neither do you.
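Two of those tips translate directly into code, so here is a rough sketch. The sampling values are the ones from the list above; the keyword names follow Hugging Face generate() conventions, which is my assumption about how you are running the model. The strip_thinking helper is a hypothetical name of my own, and it assumes assistant turns are stored as plain strings that may still contain the reasoning followed by </think>.

```python
# Sampling settings from the list above, as keyword arguments.
# ASSUMPTION: names follow Hugging Face `generate()` conventions.
SAMPLING = {
    "do_sample": True,
    "temperature": 0.6,
    "top_p": 0.95,
    "top_k": 20,
    "min_p": 0.0,
}
# Intended use (not executed here):
#   model.generate(**model_inputs, **SAMPLING, max_new_tokens=32768)


def strip_thinking(messages: list[dict]) -> list[dict]:
    """Return a copy of the chat history with reasoning removed from assistant turns."""
    cleaned = []
    for msg in messages:
        if msg["role"] == "assistant":
            # Keep only what follows the last </think>; unchanged if the tag is absent.
            _, _, answer = msg["content"].rpartition("</think>")
            msg = {**msg, "content": answer.strip()}
        cleaned.append(msg)
    return cleaned


history = [
    {"role": "user", "content": "What is 2+2?"},
    {"role": "assistant", "content": "Simple arithmetic.\n</think>\n\n4"},
    {"role": "user", "content": "And doubled?"},
]
for msg in strip_thinking(history):
    print(msg["role"], "->", repr(msg["content"]))
```

The helper builds new message dicts instead of editing the originals, so you can keep the full reasoning in your own logs while sending only the trimmed history back to the model.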

The Verdict: Is It Worth Your Time?

So, after all that, is Qwen3-4B-Thinking-2507 worth your time? In a word: yes. This model punches well above its weight class, often matching or even surpassing the 30B model in certain benchmarks. It’s like watching a rookie healer out-heal the veteran tank in a raid—unexpected, but undeniably impressive.

The long-context capability is a game-changer for anyone dealing with large documents or complex multi-turn conversations. The improved reasoning and tool-calling abilities make it a solid choice for both research and practical applications. And the fact that it’s open-source and relatively easy to deploy (assuming you have the hardware and patience) is the cherry on top.

Of course, it’s not perfect. The setup can be a bit daunting for newcomers, and the constant parsing of “thinking content” feels like busywork. But these are minor quibbles in an otherwise stellar package.

In conclusion, Qwen3-4B-Thinking-2507 is a model that thinks it’s a 30B—and in many ways, it’s right. If you’re looking for a powerful, versatile, and (mostly) user-friendly AI model, this one deserves a spot in your party.

And that, ladies and gentlemen, is entirely my opinion.

Source: Qwen3-4B-Thinking-2507, https://huggingface.co/Qwen/Qwen3-4B-Thinking-2507