AI’s $100K Developer Bill: The Tokenocalypse Nobody Saw Coming

Hello everyone. Today, let’s talk about the impending financial sinkhole that is AI application inference costs – because clearly, nothing screams “the future of accessible technology” like sending your devs an invoice that could buy them a new house.

The Token Tsunami

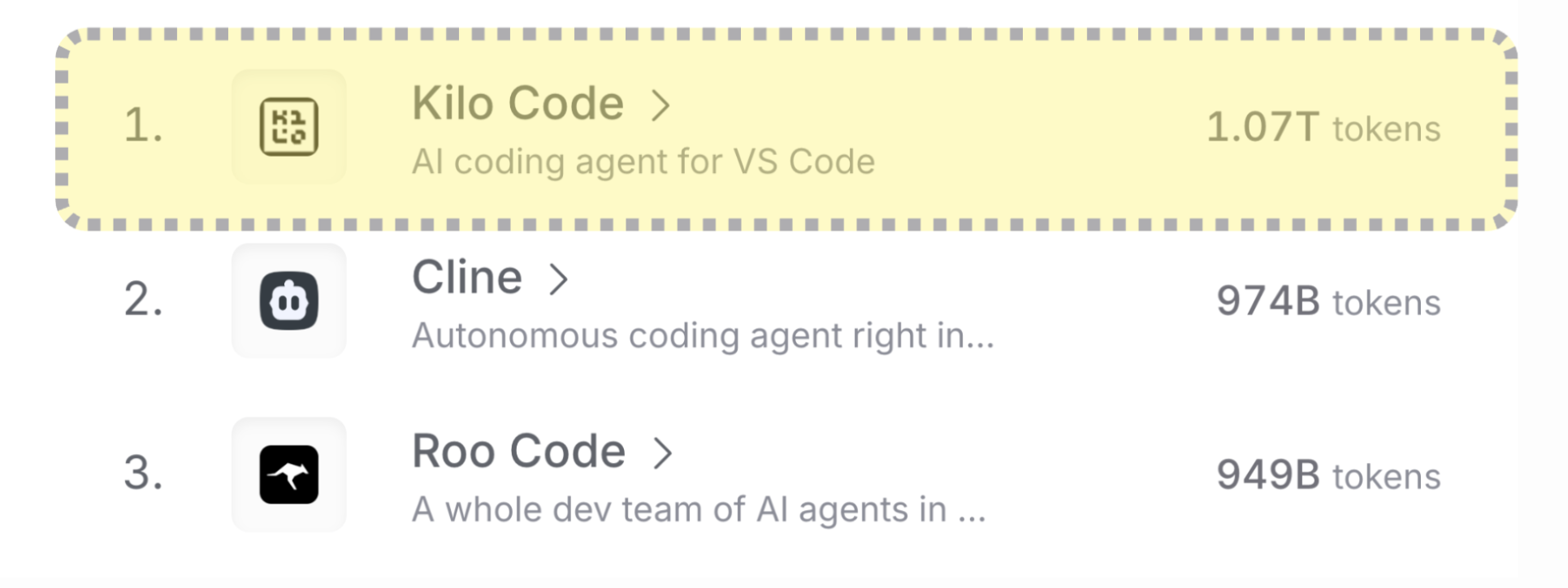

Kilo just smashed through the “one trillion tokens a month” milestone. Yes, trillion. That’s 1,000,000,000,000 little units of AI brain juice, each one costing real, hard currency. The open-source trifecta – Cline, Roo, and Kilo – is sprinting ahead, helped along mainly by Cursor and Claude Code deciding: “You know what would make users love us? Let’s throttle them like it’s dial-up internet in 1998.”

The industry made a gloriously misguided bet. It figured that falling raw inference costs would drag application inference costs down with them, which is like assuming that cheaper potatoes mean free French fries at a five-star restaurant. The result? Startups selling gold-plated subscription plans at massive losses in the hope that costs would crash. They didn’t. Instead, costs went full Skyrim-draugr-rising-from-the-coffin: up and relentless.

Why Costs Exploded (Spoiler: Math and Bad Assumptions)

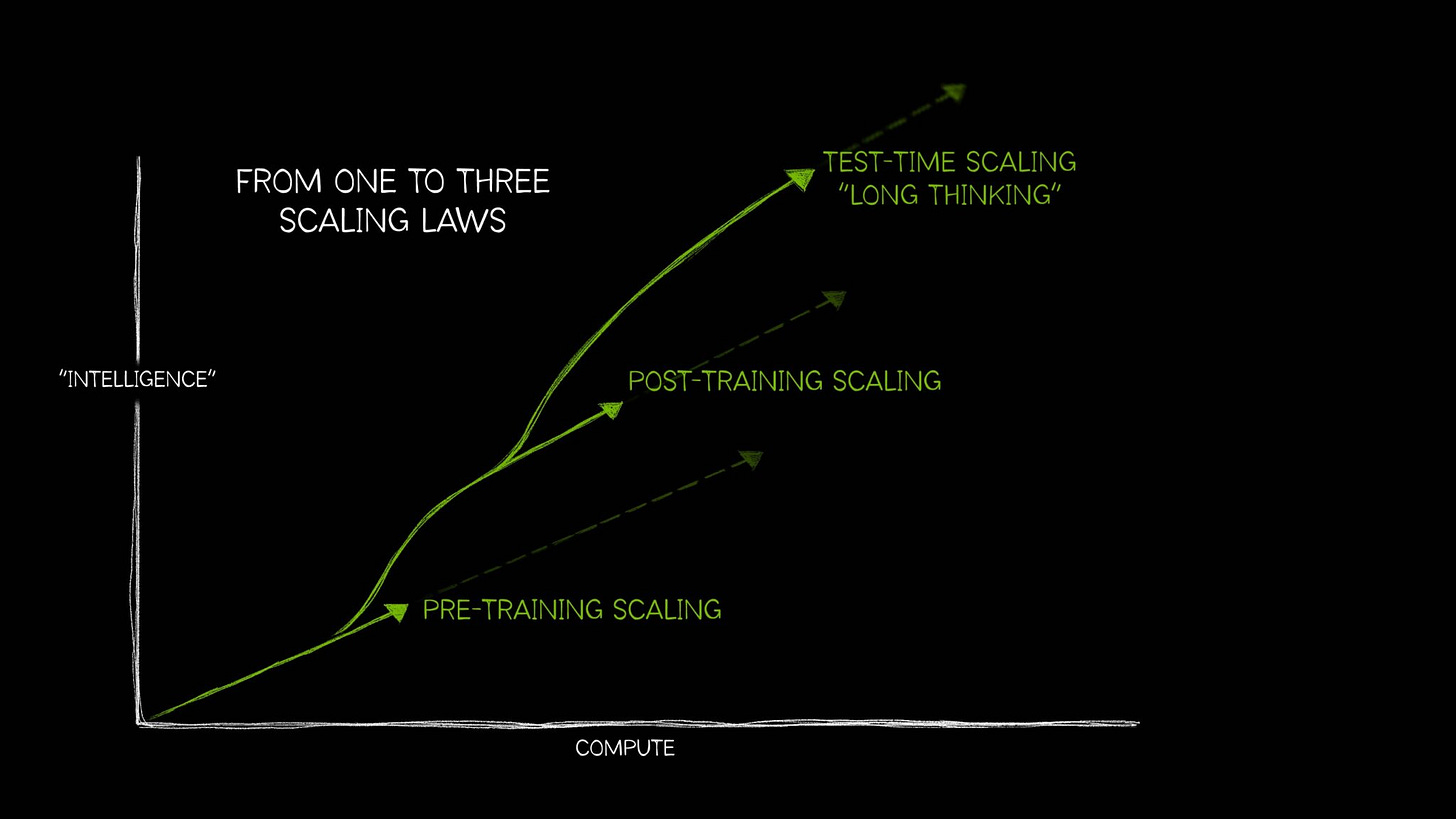

The per-token price of frontier models stayed locked – and token consumption ballooned faster than an MMO loot inflation scandal. Why? Test-time scaling: models “thinking more” during inference, chucking 100x the compute at hard problems. Turns out, the smarter the AI pretends to be, the more it blows through tokens like a toddler with a bag of Skittles.

And then the apps themselves started gulping down tokens because “big suggestions” became the status symbol of modern coding. The result? Costs multiplied by 10 over just two years. Cursor’s $20 plan is now a memory, replaced with a $200 tier that’s somehow considered “standard” – and yes, Claude Code followed suit faster than a suspiciously well-timed Steam sale.
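To see why a flat per-token price is no comfort, here is a back-of-the-envelope sketch in Python. Every number in it is a made-up placeholder: the price per million tokens and the monthly token counts are assumptions for illustration, not figures from any vendor’s rate card. The only thing it borrows from the story above is the roughly 10x growth in consumption.

```python
# Back-of-the-envelope sketch: flat per-token price, growing token usage.
# All figures are hypothetical placeholders, not real vendor pricing.

def monthly_cost(tokens: int, price_per_million_usd: float) -> float:
    """Cost in USD of consuming `tokens` at a flat price per million tokens."""
    return tokens / 1_000_000 * price_per_million_usd

PRICE_PER_MILLION_USD = 10.0      # assumed, and held constant in both cases
TOKENS_BEFORE = 2_000_000         # assumed monthly usage two years ago
TOKENS_NOW = TOKENS_BEFORE * 10   # the ~10x consumption growth described above

cost_before = monthly_cost(TOKENS_BEFORE, PRICE_PER_MILLION_USD)
cost_now = monthly_cost(TOKENS_NOW, PRICE_PER_MILLION_USD)

print(f"before: ${cost_before:.2f}/month")
print(f"now:    ${cost_now:.2f}/month")
print(f"growth: {cost_now / cost_before:.0f}x")
```

The price never moves, yet the bill grows in lockstep with the token count. That is the whole trick.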

The $200 Throttle Club

Even if you cough up $200 a month, heavy users get the AI equivalent of a slap on the wrist: rate limiting, context compression, and suddenly being handed bargain-bin models. Essentially: “Thanks for your money, now please enjoy this low-poly NPC brain.”

Open source tools like Cline, Roo, and Kilo, on the other hand, promise “never throttle the user” – which is refreshing, like finding a healer in your raid who actually knows the mechanics. They also give you a toolbox to cut costs, from splitting work into micro-quests to Frankensteining open/closed-source models together. You can even pull the plug on a hallucinating model mid-run – like mercifully closing a failing surgery before the patient sprouts a tail.

- Split work into smaller, efficient tasks.

- Use AI modes for different job roles: Orchestrator, Architect, Code, Debug.

- Mix open-source for code, closed-source for architecture.

- Improve and optimize prompts before sending.

- Optimize memory and context banks like a digital hoarder.

- Enable caching – because why pay twice for the same mistake?

- Terminate runs before the AI veers into “talking to ghosts” territory.
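Three items from that list (modes per job role, caching, and killing a run early) can be sketched in a few lines of Python. This is a toy under loud assumptions, not how Cline, Roo, or Kilo actually implement anything: the model names, the mode-to-model table, the `CachedRouter` class, and the token budget are all hypothetical, and the cache is a plain in-memory response cache rather than provider-side prompt caching.

```python
# Toy sketch: mode-based model routing, response caching, and a token cutoff.
# Model names, the routing table, and the budget are hypothetical.
import hashlib
from typing import Callable, Dict, Iterable, List

# Assumed routing: cheaper open model for code and debugging,
# pricier closed model for orchestration and architecture.
MODEL_FOR_MODE: Dict[str, str] = {
    "orchestrator": "closed-large",
    "architect": "closed-large",
    "code": "open-small",
    "debug": "open-small",
}

class CachedRouter:
    def __init__(self, call_model: Callable[[str, str], Iterable[str]],
                 max_output_tokens: int) -> None:
        self.call_model = call_model            # (model, prompt) -> token stream
        self.max_output_tokens = max_output_tokens
        self.cache: Dict[str, str] = {}
        self.billed_calls = 0                   # calls that actually hit a model

    def run(self, mode: str, prompt: str) -> str:
        model = MODEL_FOR_MODE[mode]
        key = hashlib.sha256(f"{model}\n{prompt}".encode()).hexdigest()
        if key in self.cache:                   # same model + prompt: pay once
            return self.cache[key]
        self.billed_calls += 1
        out: List[str] = []
        for token in self.call_model(model, prompt):
            if len(out) >= self.max_output_tokens:
                break                           # pull the plug on a runaway run
            out.append(token)
        result = " ".join(out)
        self.cache[key] = result
        return result

def fake_model(model: str, prompt: str) -> Iterable[str]:
    """Stand-in for a real streaming API: rambles for 1000 tokens."""
    for i in range(1000):
        yield f"{model}:{i}"

router = CachedRouter(fake_model, max_output_tokens=5)
first = router.run("code", "write a parser")
second = router.run("code", "write a parser")   # served from the cache
print(first)
print("billed calls:", router.billed_calls)
```

A real setup would also need cache invalidation and a smarter stop condition than a raw token count, but the shape of the savings is the same: route cheap work to cheap models, never pay for the same answer twice, and stop the stream when it goes off the rails.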

Prepare for the $100K Apocalypse

The source article paints a grim but unsurprising future: power users’ annual AI bills could soon hit $100K. Not because of malice (mostly), but because of parallel agents running wild and models doing more work without asking you for a coffee break. It’s the MMO raid boss problem – the more DPS you have, the faster you burn through resources.

So yes, $100K a year for inference is coming. And in the inevitable corporate meeting where someone asks “Is this worth it?” the answer will be “Well… cheaper than hiring five humans, I guess.”
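For the skeptics, here is how parallel agents can get a single developer to six figures. This is a rough sketch with invented inputs: the agent count, tokens per agent-hour, working schedule, and price are all assumptions picked so the arithmetic lands on the headline number, not measurements from the source article.

```python
# Rough sketch: annual inference bill for one developer running parallel agents.
# Every input below is a hypothetical assumption, not a measured figure.

def annual_inference_bill(parallel_agents: int,
                          tokens_per_agent_hour: int,
                          hours_per_day: int,
                          working_days: int,
                          price_per_million_usd: float) -> float:
    """Total yearly cost in USD at a flat price per million tokens."""
    total_tokens = (parallel_agents * tokens_per_agent_hour
                    * hours_per_day * working_days)
    return total_tokens / 1_000_000 * price_per_million_usd

bill = annual_inference_bill(
    parallel_agents=5,                # assumed: five agents running at once
    tokens_per_agent_hour=1_000_000,  # assumed throughput per agent
    hours_per_day=8,
    working_days=250,
    price_per_million_usd=10.0,       # assumed blended price
)
single_agent_bill = annual_inference_bill(1, 1_000_000, 8, 250, 10.0)

print(f"five agents: ${bill:,.0f} per year")
print(f"one agent:   ${single_agent_bill:,.0f} per year")
```

The bill scales linearly with the number of agents, so every extra agent you spin up is another line item of the same size.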

Oh, And Training Engineers Make Everyone Else Look Poor

If you think $100K for inference is bad, look at training. Frontier AI labs are throwing $100 million pay packages and massive compute budgets at “training engineers.” Meanwhile, inference engineers are the working-class NPCs of this economy, toiling in the mines and running up their six-figure inference bills, while a handful of people call the shots on global AI capability.

But of course, the disparity exists for a reason: one model training run can ripple out to millions of users, like some kind of digital butterfly effect – except instead of causing rainstorms in Brazil, it causes CEOs in Silicon Valley to buy their third yacht.

Final Verdict

So where do I fall on this? The tech is exciting, the use cases are incredible, and the potential productivity gains are undeniable – but the economic model feels like Blizzard-level monetization wrapped in VC-funded idealism. If you aren’t ready to bleed tokens (and by extension, cash), you might want to back away slowly and reconsider whether you’re building in AI for its benefits or for the bragging rights. Right now, the infrastructure feels less like the dawn of a utopian AI age and more like a cleverly disguised slot machine.

And that, ladies and gentlemen, is entirely my opinion.

Article source: Future AI bills of $100k/yr per dev